Machine learning algorithms struggle with noisy data, mistaking it for patterns. This can lead to poor performance. Techniques like auto-encoders, PCA, and filtering in the frequency domain can help clean data by removing noise and keeping the important signal.

Real-world data, the input to data mining algorithms, is affected by several factors, and noise is one of the most significant. Noise is unavoidable, but any company that relies on data must address it.

Humans are likely to make mistakes when collecting data, and the instruments that collect data can be inaccurate, so errors end up in the dataset. These errors are known as noise. If it is not handled properly, noise can cause problems for machine learning algorithms, because the algorithm may treat the noise as a pattern and start generalizing from it.

The image below shows how different types of noise can impact datasets.

Source: sci2s.ugr.es

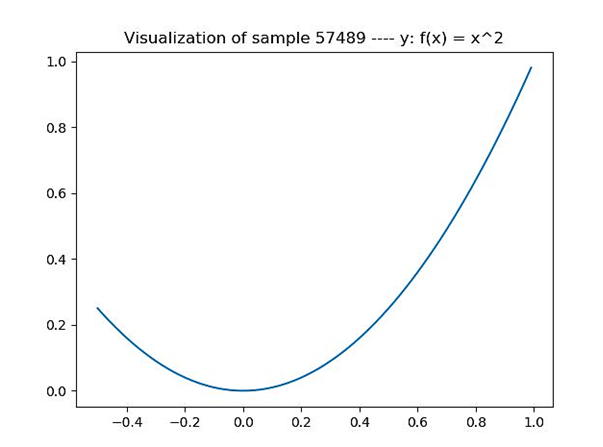

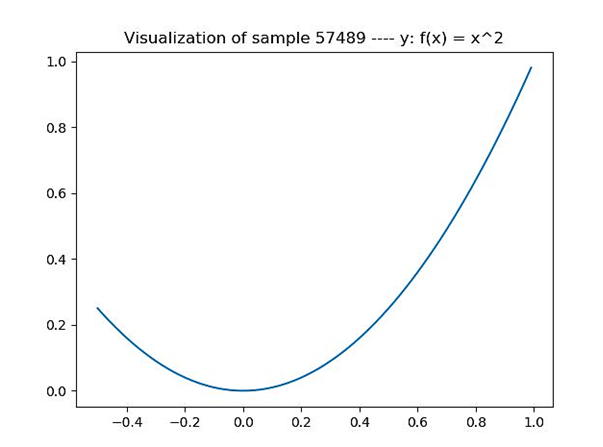

Thus, a noisy dataset can degrade the quality of the entire analysis pipeline. Analysts and data scientists typically quantify noise as a signal-to-noise ratio. The effect of noise on a signal is shown below.

Source: machinecurve.com

Therefore, any data scientist needs to tackle the noise in the dataset when using any algorithm.
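As a rough illustration of the signal-to-noise ratio mentioned earlier, the sketch below computes it in decibels. It assumes a clean reference signal is available, which is rarely the case outside of simulations; the function name `snr_db` and the sine-wave data are illustrative choices, not part of any particular library.

```python
import numpy as np

def snr_db(clean, noisy):
    """Signal-to-noise ratio in decibels, given a clean reference signal."""
    clean = np.asarray(clean, dtype=float)
    noise = np.asarray(noisy, dtype=float) - clean
    signal_power = np.mean(clean ** 2)
    noise_power = np.mean(noise ** 2)
    return 10.0 * np.log10(signal_power / noise_power)

# Illustrative data: a 5 Hz sine wave corrupted by Gaussian noise.
rng = np.random.default_rng(0)
t = np.linspace(0.0, 1.0, 1000, endpoint=False)
clean = np.sin(2 * np.pi * 5 * t)
noisy = clean + rng.normal(scale=0.5, size=t.shape)
print(f"SNR: {snr_db(clean, noisy):.1f} dB")
```

A higher value means the signal dominates the noise; the same function can be used to compare a signal before and after any of the de-noising techniques discussed in this article.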

Techniques to Remove Noise from Signal/Data in Machine Learning

In this article, we will look at the steps we can take in machine learning to improve the quality of a dataset by removing noise from it. Several widely used techniques exist for removing noise from a signal or dataset.

Deep De-noising Auto-encoder Method

Auto-encoders are useful for de-noising; a de-noising auto-encoder is a stochastic version of the basic auto-encoder. Because auto-encoders can learn to tell the noise apart from the signal, they can serve as de-noisers: noisy data goes in and clean data comes out. An auto-encoder has two parts: an encoder, which maps the input data into an encoded form, and a decoder, which reconstructs the data from that encoded state.

The main idea behind de-noising auto-encoders is to force the hidden layer to learn more robust features. We train the auto-encoder to recreate the clean input from a degraded version of it by minimizing the reconstruction loss. One example shows how auto-encoders can remove noise from a signal.

Intuitively, a de-noising auto-encoder does two things: it encodes the input while preserving as much information about the data as possible, and it undoes the effect of the noise that was stochastically applied to the input.
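To make the encoder/decoder training loop concrete, here is a minimal sketch of a de-noising auto-encoder written directly in NumPy. Everything in it is an assumption chosen for illustration: the single tanh hidden layer, the sine-wave training data, the noise level, and the hyperparameters. A practical model would normally be deeper and built with a deep learning framework.

```python
import numpy as np

def make_sine_batch(n_samples, n_points, rng):
    """Clean signals for the demo: unit sine waves with random phase."""
    t = np.linspace(0.0, 2 * np.pi, n_points, endpoint=False)
    phase = rng.uniform(0.0, 2 * np.pi, size=(n_samples, 1))
    return np.sin(t + phase)

def train_denoising_autoencoder(clean, hidden=8, noise_std=0.3,
                                epochs=2000, lr=0.01, seed=0):
    """Train a one-hidden-layer auto-encoder to map noisy inputs to clean targets."""
    rng = np.random.default_rng(seed)
    n, d = clean.shape
    w_enc = rng.normal(scale=0.1, size=(d, hidden))
    b_enc = np.zeros(hidden)
    w_dec = rng.normal(scale=0.1, size=(hidden, d))
    b_dec = np.zeros(d)
    for _ in range(epochs):
        # Stochastically corrupt the input; the training target stays clean.
        noisy = clean + rng.normal(scale=noise_std, size=clean.shape)
        code = np.tanh(noisy @ w_enc + b_enc)   # encoder
        recon = code @ w_dec + b_dec            # decoder
        err = recon - clean                     # gradient of 0.5 * squared error
        # Backpropagate through the decoder, then through the tanh encoder.
        grad_code = (err @ w_dec.T) * (1.0 - code ** 2)
        w_dec -= lr * (code.T @ err) / n
        b_dec -= lr * err.mean(axis=0)
        w_enc -= lr * (noisy.T @ grad_code) / n
        b_enc -= lr * grad_code.mean(axis=0)

    def denoise(x):
        return np.tanh(x @ w_enc + b_enc) @ w_dec + b_dec

    return denoise

rng = np.random.default_rng(1)
denoise = train_denoising_autoencoder(make_sine_batch(256, 32, rng))
test_clean = make_sine_batch(64, 32, rng)
test_noisy = test_clean + rng.normal(scale=0.3, size=test_clean.shape)
mse_before = np.mean((test_noisy - test_clean) ** 2)
mse_after = np.mean((denoise(test_noisy) - test_clean) ** 2)
print(f"MSE before: {mse_before:.4f}, after: {mse_after:.4f}")
```

The key design point is that the loss compares the reconstruction with the clean signal while the network only ever sees the corrupted one, which is what pushes the hidden layer toward features that ignore the noise.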

PCA (Principal Component Analysis)

PCA is a statistical procedure that uses an orthogonal transformation to convert a set of possibly correlated variables into a set of uncorrelated variables. These new variables are called “principal components.” PCA aims to remove noise from a signal or image while preserving its essential features. The approach is both geometric and statistical: PCA projects the input data onto a set of axes and thereby reduces the dimension of the input signal or data.

For intuition, consider projecting a point in the XY plane onto the X-axis. If the noise lies mostly along the Y-axis, dropping that axis eliminates it. This is known as “dimensionality reduction.” Principal component analysis can therefore reduce noise in the input data by discarding the axes that mostly contain noise. This paper uses PCA to remove noise from data in two stages, taking noisy data as input and producing de-noised data as output.
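The projection idea can be sketched in a few lines of NumPy. This is a single-stage illustration, not the two-stage method of the paper mentioned above; the function name `pca_denoise`, the synthetic low-rank data, and the choice of two components are assumptions made for the example.

```python
import numpy as np

def pca_denoise(data, n_components):
    """Project rows of `data` onto the top principal axes and map back."""
    mean = data.mean(axis=0)
    centered = data - mean
    # Rows of `vt` are the principal axes, ordered by decreasing variance.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    axes = vt[:n_components]
    scores = centered @ axes.T      # coordinates along the kept axes
    return scores @ axes + mean     # reconstruction without the dropped axes

# Illustrative data: 10 observed variables driven by 2 hidden factors, plus noise.
rng = np.random.default_rng(0)
latent = rng.normal(size=(200, 2))
mixing = rng.normal(size=(2, 10))
clean = latent @ mixing
noisy = clean + rng.normal(scale=0.1, size=clean.shape)
denoised = pca_denoise(noisy, n_components=2)
print("MSE noisy:   ", np.mean((noisy - clean) ** 2))
print("MSE denoised:", np.mean((denoised - clean) ** 2))
```

In real data the number of components to keep is not known in advance; it is usually chosen from the explained variance, and keeping too few components removes signal along with the noise.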

Fourier Transform Technique

Studies have shown that we can remove noise directly from a signal or dataset if the signal has structure. In this approach, we convert the signal into the frequency domain by taking its Fourier transform. The structure is not visible in the raw signal, but once the signal is decomposed into frequencies it becomes apparent that most of the information in the time domain is concentrated in just a few frequencies. Noise is random, so it is spread across all frequencies. This implies that we can filter out most of the noise by keeping the frequencies that carry the most signal information and discarding the rest. In this way, it is possible to remove much of the noise from the dataset.
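A minimal sketch of this frequency-domain filtering, using NumPy's FFT routines, might look as follows. The rule of keeping only the `keep` strongest frequency bins is one simple assumption among several possible ones (a magnitude threshold or a band-pass filter are common alternatives), and the two-tone test signal is made up for the example.

```python
import numpy as np

def fft_denoise(signal, keep=2):
    """Keep the `keep` strongest frequency bins and zero out the rest."""
    spectrum = np.fft.rfft(signal)
    strongest = np.argsort(np.abs(spectrum))[-keep:]
    mask = np.zeros(spectrum.shape, dtype=bool)
    mask[strongest] = True
    filtered = np.where(mask, spectrum, 0.0)
    return np.fft.irfft(filtered, n=len(signal))

# Illustrative data: two sine tones buried in Gaussian noise.
rng = np.random.default_rng(0)
t = np.arange(512) / 512.0
clean = np.sin(2 * np.pi * 5 * t) + 0.5 * np.sin(2 * np.pi * 12 * t)
noisy = clean + rng.normal(scale=0.5, size=t.shape)
denoised = fft_denoise(noisy, keep=2)
print("MSE noisy:   ", np.mean((noisy - clean) ** 2))
print("MSE denoised:", np.mean((denoised - clean) ** 2))
```

This works well here because the signal occupies exactly two frequency bins. A signal whose energy is spread over many frequencies needs a larger `keep`, at the cost of letting more noise through.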

Using a Contrastive Dataset

Suppose we need to clean a noisy dataset in which the noise consists of major background trends that are of no interest to the data scientist. This technique addresses that case by removing the noise with an adaptive noise cancellation strategy. It uses two signals: a target signal and a background signal that contains only noise. By removing the background signal from the target, we can estimate the uncorrupted signal.
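One classical way to realize adaptive noise cancellation with a target and a background signal is a least-mean-squares (LMS) filter. The sketch below is offered under that assumption and is not necessarily the method used in contrastive-dataset work; the function name, the number of filter taps, the step size `mu`, and the synthetic signals are all illustrative.

```python
import numpy as np

def lms_noise_cancel(primary, reference, n_taps=4, mu=0.01):
    """Subtract an adaptively filtered copy of `reference` from `primary`."""
    primary = np.asarray(primary, dtype=float)
    reference = np.asarray(reference, dtype=float)
    weights = np.zeros(n_taps)
    cleaned = primary.copy()
    for i in range(n_taps - 1, len(primary)):
        window = reference[i - n_taps + 1:i + 1][::-1]  # newest sample first
        cleaned[i] = primary[i] - weights @ window       # remove estimated noise
        weights += 2.0 * mu * cleaned[i] * window        # LMS weight update
    return cleaned

# Illustrative data: a sine target plus background noise that leaks in
# through an unknown two-tap filter.
rng = np.random.default_rng(0)
n = 5000
target = np.sin(2 * np.pi * np.arange(n) / 200.0)
background = rng.normal(size=n)
leaked = np.convolve(background, [0.8, 0.3])[:n]
primary = target + leaked
cleaned = lms_noise_cancel(primary, background)
half = n // 2  # skip the initial adaptation period
print("MSE before:", np.mean((primary[half:] - target[half:]) ** 2))
print("MSE after: ", np.mean((cleaned[half:] - target[half:]) ** 2))
```

The filter never sees the clean target. It only learns how the background leaks into the measurement, which is why a background recording that contains noise alone is required.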

How does Google reduce noise?

In a recent paper, Google AI researchers present a way of dealing with noisy data. The authors report that their method, which is based on Bregman divergences, outperforms a related two-temperature method based on the Tsallis divergence.

The method shows significant potential for handling large-margin outliers and small-margin mislabeled data. The research shows that it is practical for a wide variety of image classification tasks.

More information on the research is available here.

Summary

Separating the signal from the noise is a significant concern for data scientists because noise can cause performance problems, including overfitting, and make a machine learning algorithm behave unpredictably. An algorithm can mistake noise for a pattern and start generalizing from it. The best approach is therefore to remove or reduce the noise in your signal or dataset. Many methods have been proposed to handle the noisy data problem, and feature selection and dimensionality reduction techniques such as those described above can help solve it.
